Social Sciences Faculty Research Symposium

The Social Sciences Faculty Research Symposium is a semesterly event designed to introduce attendees to a specific research topic, to the scholarship of our faculty, and, more generally, to the process of social-science research.

Crossing Academic Borders: Transglobal Justice Issues and Online Collaborations with CETYS-Mexico

Professors Bordoni, DeMarco, Sylla, and Resko of the Department of Social Sciences at QCC-CUNY with Professors Palacios (CETYS-Mexicali), Tapia (CETYS-Mexicali), and Madrid (CETYS-Tijuana)

Wednesday, March 12, 2025 from 12:10-1pm in LB29

During the spring 2021 semester, four faculty members from the QCC Social Sciences Department met to develop a transglobal online project with John Jay College's Latinx Studies Department and CETYS-Mexico, a private university with campuses in Tijuana, Mexicali, and Ensenada. Professor Adrian Bordoni, Professor Mike DeMarco, Dr. Jody Resko, and Dr. Keba Sylla recruited students from their Criminal Justice and Psychology classes to meet over several sessions and share their critical analyses of documents addressing violence, stereotypes, transborder issues, and immigration policies. The application of High-Impact Practices set the tone for students to debate these complex issues and find common experiences around them. Inspired by the Common Read book King of the Armadillos by Wendy Chin-Tanner, this presentation included observations by CETYS and QCC faculty, who discussed CETYS research and intervention programs, support for non-profits working with asylum seekers and refugees, and their participation in a week-long conference held in Mexico in September 2024.

Teaching Sociology of the Arts at an Urban Community College during the Pandemic: Reflections on Structure, Agency and Community Engagement

Julia Rothenberg, Associate Professor of Sociology, QCC-CUNY

Wednesday, October 30, 2024 from 12:10-1pm in LB29

In response to the challenges of online teaching during the COVID-19 pandemic, Dr. Julia Rothenberg developed a semester-long assignment built around an immersive, community-based research project in which students examined the impact of the pandemic on several New York City community-based arts organizations. In this presentation, Dr. Rothenberg will address how the project raised both new and longstanding questions about how to teach Sociology of the Arts to students whose cultural capital is not compatible with conventional academic reward systems. She will also explain how the challenges of teaching the sociology of the arts during troubling times prompted her to systematically evaluate the contributions of two seemingly incompatible pedagogical approaches to teaching sociology: the tradition of humanist sociology and the more deterministic perspective on social reproduction, education, and culture offered by the French sociologist Pierre Bourdieu. Finally, Dr. Rothenberg will discuss the implementation, challenges, and successes of this experiential learning project, as well as its impact on her own thinking.

Navigating the Storm: Resilience and Psychological Well-being of EMS Providers Amidst the COVID-19 Pandemic

Dr. Celia Sporer, Assistant Professor of Criminal Justice, QCC-CUNY

Wednesday, March 13, 2024 from 12:10-1pm in LB29

The COVID-19 pandemic profoundly affected society, and EMS providers navigated its challenges firsthand. Facing a novel virus and escalating call volumes, they nonetheless stood out from other healthcare workers: while many healthcare workers experienced increased stress and psychological problems, EMS providers showed notable resilience. Exploratory research conducted at the peak of the pandemic found that EMS providers maintained balanced well-being, responding proactively and viewing the crisis as an opportunity for growth. In this presentation, Dr. Celia Sporer, Assistant Professor of Criminal Justice at Queensborough Community College-CUNY, will present her findings from a follow-up study that reaffirms EMS providers' exceptional resilience and sheds light on their unique psychological dynamics. She will also show how, despite the distinct challenges of their dual roles as first responders and healthcare providers, this essential group still lacks tailored support, and she will emphasize the need for targeted research and interventions to better support this vital frontline healthcare workforce.

Tossing the Test: Using Renewable Assignments to Increase Student Engagement

Dr. Jody Resko, Assistant Professor of Psychology and Education, QCC-CUNY

Wednesday, November 1, 2023 from 12:10-1pm in LB29

In this presentation, Dr. Jody Resko, Assistant Professor of Psychology and Education at Queensborough Community College-CUNY, will present an aspect of her pedagogical research on student engagement. According to the literature, whether students are willing or unwilling learners depends on how they are taught, and students are likely to differ in the instructional techniques they find most engaging (Komarraju and Karau, 2008). As a result, instructors are often searching for ways to develop assignments that are interesting and relevant to their students. Universal Design for Learning (UDL) and Open Pedagogy are two approaches to engaging students (Smith, 2012; Seraphin et al., 2019). In introductory college courses, instructors often rely on disposable assignments (e.g., quizzes, papers), which are typically seen only by the instructor and then discarded. Renewable assignments, which produce work with value beyond the course itself, have been recommended as one way to increase student engagement and ultimately promote deeper learning (Seraphin et al., 2019). Dr. Resko will add to this literature with results showing that students who completed renewable assignments were more engaged than those who completed traditional quizzes. These findings suggest that renewable assignments are an effective tool for increasing student engagement and may ultimately lead to better learning outcomes.

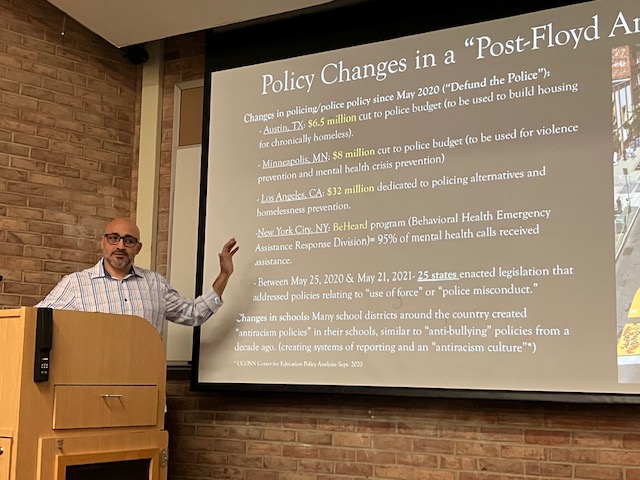

An Earnest Struggle: Antiracism in a “post”-Trump America

Dr. Trevor Milton, Associate Professor of Sociology and Criminal Justice

Wednesday, March 15, 2023 from 12:10-1pm in LB29

In this presentation, Dr. Trevor Milton, Associate Professor of Sociology and Criminal Justice, will discuss his direction of An Earnest Struggle, a documentary film about the roots of institutional racism in the United States, the modern forms that racism takes, and four community-based organizations working to dismantle it. Dr. Milton will highlight his findings on the work of antiracism organizations and the techniques they use to inch closer to the goal of "ending racism."

Looking Expensive: Experts’ Valuation of Paintings is Influenced by Context

Dr. Qin Li, Assistant Professor of Psychology

October 26, 2022 12:00 PM - 1:00 PM

Video of recorded event to come

In this presentation, Dr. Qin Li will present research on the valuation of paintings. Many factors influence the price of artwork, with the opinions of experts and the reputation of the artist weighing heavily on price outcomes. Few studies have examined context as an influence on price, despite its impact on how people experience and evaluate art. Dr. Li's research looks at key factors such as expertise and context to determine their influence on price. More specifically, it tests whether novices, quasi-experts, and experts price artwork differently when the setting is manipulated, and it examines how the style and reputation of artworks affect their value. Contrary to expectations, Dr. Li's research finds that experts are the most influenced by the setting manipulation when determining prices, despite their reported familiarity with the established paintings shown. Experts and quasi-experts are also more likely than novices to price paintings according to reputation. The research also finds a significant three-way interaction among expertise, reputation, and style, whereby those with greater expertise are aptly influenced by reputation and style when pricing art.

Juvenile Justice Policy Goals: A Qualitative Inquiry into Purpose Clauses

Dr. Emily Pelletier, Assistant Professor of Criminal Justice, QCC-CUNY

March 9, 2022 12:00 PM - 1:00 PM

In this presentation, Dr. Emily Pelletier, Assistant Professor of Criminal Justice at Queensborough Community College, will discuss juvenile justice systems across the US. While these systems share a common history of rehabilitative ideals and constitutionally required due process protections, each state retains responsibility for creating and amending the statutes that govern its juvenile justice system. Differences among state statutes, and statutory changes over time, raise the question of whether juvenile justice systems in the US share similar goals and what those goals specifically entail. This presentation will identify the thematic goals of state juvenile justice systems in the United States using data from a qualitative content analysis of the purpose clauses in state juvenile justice legal codes.

Weakening Immigrant Children's Rights During the Trump Administration

Dr. Gabriel Lataianu, Assistant Professor of Sociology, QCC-CUNY

October 20, 2021 12:10 PM - 1:00 PM

In this presentation, Dr. Gabriel Lataianu, Assistant Professor of Sociology at Queensborough Community College, will discuss the Trump administration's family separation policy and its subsequently proposed family detention centers. He will also shed light on how these policies flagrantly violated the Flores Agreement, the most important legal safeguard for immigrant children in the U.S., leaving thousands of immigrant children in legal limbo, since apprehended minors are protected neither by the U.S. Constitution nor by the U.N. Convention on the Rights of the Child (the U.S. being the only U.N. member state that has not ratified the Convention).

What Could a Robot Know About? The Discovery of the Mind in Language

Dr. Patrick Byers, Assistant Professor of Psychology, QCC-CUNY

March 17, 2021 12:00 PM - 1:00 PM

The development of deep neural networks (DNNs) has significantly advanced artificial intelligence, with machines now able to carry out complex tasks that, in some cases, appear to exceed human ability. However, the underlying operation of DNNs is opaque (not readily interpretable), and although generally reliable, DNNs are prone to somewhat unpredictable and potentially serious errors. This has prompted significant efforts to develop systems that can provide meaningful explanations of their own functioning, so-called "explainable AI." Such systems would account for their behavior as human beings do, that is, in terms of reasons involving beliefs or knowledge, attitudes, and/or desires. Work in discourse analysis reveals profound challenges facing these efforts. Ascriptions of what others know or believe, think, feel, or want have a clear meaning only in relation to certain assumptions about how people can be expected to behave (and not behave). These assumptions figure prominently in judgments about whether a person's behavior reflects genuine understanding or merely rote training. A number of recent cases of DNN behavior suggest that the assumptions in question do not hold for these systems.